The adoption of AI search engines and assistants, such as ChatGPT, Perplexity, and Gemini, is growing steadily in companies of all kinds, boosting productivity and real-time content generation. However, according to Cisco, 97% of organizations feel pressure to integrate AI, and 68% admit they are unprepared for its security risks.

What is privacy in artificial intelligence?

Privacy in AI consists of ensuring that personal and corporate data used in search engines and intelligent assistants is collected, processed, and stored securely and in accordance with current regulations.

Under the General Data Protection Regulation (GDPR), personal data must be processed lawfully, fairly, and transparently, and limited to what is necessary for the stated purpose. In addition, data subjects have the right to access, rectify, or erase their data, even when cloud-based AI models are used.

The principles of privacy in artificial intelligence

To protect data in AI tools, it is advisable to rely on a set of principles that guide both the design and ongoing use of these solutions:

• Minimization: only send the AI the data that is strictly necessary.

• Anonymization and pseudonymization: replace direct identifiers with codes or keys.

• Transparency: inform users and customers about what information is shared and for what purposes.

• Accountability: document processes, record consents, and conduct regular audits.

• Least privilege: grant each user or service only the permissions that are essential.
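As an illustration of minimization and pseudonymization, here is a minimal Python sketch that swaps email addresses and phone numbers for keyed codes before a text leaves your systems. The function names, the two regular expressions, and the code format are assumptions made for this example; real data will need broader patterns and a properly managed secret key.

```python
import hashlib
import hmac
import re

# Hypothetical patterns for two kinds of direct identifiers.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def pseudonymize(text: str, secret_key: bytes) -> tuple[str, dict[str, str]]:
    """Replace emails and phone numbers with keyed codes.

    Returns the redacted text plus a mapping of code -> original value.
    The mapping must stay inside the organization.
    """
    mapping: dict[str, str] = {}

    def make_replacer(prefix: str):
        def replace(match: re.Match) -> str:
            value = match.group(0)
            # HMAC keeps codes stable for the same value without exposing it.
            digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
            code = f"<{prefix}_{digest[:8]}>"
            mapping[code] = value
            return code
        return replace

    text = EMAIL_RE.sub(make_replacer("EMAIL"), text)
    text = PHONE_RE.sub(make_replacer("PHONE"), text)
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Put the original values back into a response returned by the AI."""
    for code, value in mapping.items():
        text = text.replace(code, value)
    return text
```

Only the redacted text would be sent to the assistant; the mapping lets you re-insert the real values into its reply locally.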

Why is protecting privacy in AI essential?

Protecting privacy in AI not only avoids penalties, but also strengthens reputation and builds trust among customers, partners, and employees. According to ENISA, 85% of AI-related breaches stem from insecure configurations at external providers, highlighting the danger of delegating without control. Adopting best practices from the outset improves corporate resilience to incidents.

Under the GDPR, supervisory authorities such as the Spanish Data Protection Agency (AEPD) can impose fines of up to €20 million or 4% of global annual turnover, whichever is higher, for the most serious breaches, underscoring the need for a proactive approach. Encryption, access segmentation, and regular audits are key measures to minimize risks. In this way, your company demonstrates responsible data management and differentiates itself in the market.

How to apply good privacy practices in AI?

Below is a list of organizational and technical measures that every company should implement before exposing sensitive data to search engines or intelligent assistants:

1. Data governance:

• Create an internal AI committee with the CISO, DPO, and managers from each area.

• Define a catalog of authorized use cases and approve workflows.

2. Environment segmentation:

• Separate development, testing, and production to prevent accidental leaks.

• Apply the principle of “least privilege” in roles and permissions.

3. Minimization and anonymization:

• Send only the strictly necessary text or data fragment to the AI.

• Use anonymization tools before sharing files.

4. Supplier contracts and audits:

• Verify certifications (ISO 27001, ENS) and require semi-annual audits.

• Include confidentiality clauses and clauses on the return or deletion of data at the end of the service.

5. Continuous training:

• Train users in identifying malicious prompts and reporting protocols.

• Conduct quarterly incident response drills.
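Several of the measures above (a catalog of authorized use cases, least privilege, and environment segmentation) can be enforced in code before a request ever reaches an AI provider. The following Python sketch shows one possible shape for such a check; the catalog entries, role names, and the `AIRequest` fields are hypothetical and would come from your own AI committee's decisions.

```python
from dataclasses import dataclass

# Hypothetical catalog: approved use case -> roles allowed to run it.
AUTHORIZED_USE_CASES: dict[str, frozenset[str]] = {
    "summarize_public_docs": frozenset({"analyst", "support", "developer"}),
    "draft_customer_email": frozenset({"support"}),
    "review_source_code": frozenset({"developer"}),
}


@dataclass(frozen=True)
class AIRequest:
    user_role: str
    use_case: str
    environment: str  # "development", "testing" or "production"
    contains_personal_data: bool


def check_request(request: AIRequest) -> tuple[bool, str]:
    """Return (allowed, reason) for a request before it is sent to the AI."""
    allowed_roles = AUTHORIZED_USE_CASES.get(request.use_case)
    if allowed_roles is None:
        return False, "use case is not in the approved catalog"
    if request.user_role not in allowed_roles:
        return False, "role lacks permission for this use case"
    # Segmentation rule assumed here: personal data never leaves production.
    if request.contains_personal_data and request.environment != "production":
        return False, "personal data is not allowed outside production"
    return True, "ok"
```

Returning a reason alongside the decision makes it easier to log refusals and review them in audits.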

Benefits of protecting privacy in artificial intelligence

Implementing these best practices not only minimizes the risk of penalties and leaks, but also:

• Strengthens customer and partner trust by demonstrating a real commitment to security.

• Improves model quality by eliminating noise and unnecessary data.

• Facilitates audits and demonstrates compliance with regulatory bodies.

• Drives innovation by freeing teams from the burden of managing incidents and responding to crises.

AI applied to cybersecurity and AI security

AI security focuses on protecting your models, data, and execution environments through encryption, network segmentation, adversarial testing, and continuous monitoring to prevent system manipulation and information leaks.

In contrast, AI applied to cybersecurity uses AI models to monitor and correlate events from networks, endpoints, and logs, generating alerts and automated responses that detect and mitigate attacks across the entire infrastructure.
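To give a feel for how event monitoring turns into alerts, here is a deliberately simple Python sketch. It uses a plain statistical baseline (a z-score on hourly event counts) as a stand-in for the learned models a real product would use; the function name, the threshold of 3.0, and the idea of counting events per hour are assumptions for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(baseline: list[int], observed: list[int], threshold: float = 3.0) -> list[int]:
    """Return the indexes of observed counts that sit far above the baseline.

    baseline: event counts per hour from a period considered normal.
    observed: new counts per hour to evaluate.
    """
    if len(baseline) < 2:
        raise ValueError("baseline needs at least two data points")
    mu = mean(baseline)
    sigma = stdev(baseline)
    flagged = []
    for index, count in enumerate(observed):
        if sigma == 0:
            # A perfectly flat baseline: any increase is treated as unusual.
            is_anomalous = count > mu
        else:
            is_anomalous = (count - mu) / sigma > threshold
        if is_anomalous:
            flagged.append(index)
    return flagged
```

For example, with failed logins per hour as the input, a sudden burst well above the usual level would be flagged for review or for an automated response.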

Situations in which AI can expose your private information

1. Queries with customer data: When you ask the assistant to draft emails, generate reports, or summarize calls that include names, email addresses, phone numbers, or other personal data, that data is transmitted to the provider's servers and may be stored there, where it is exposed to unauthorized access.

2. Training with confidential documents: Some users upload contracts, payroll records, or medical files for the AI to draw conclusions or create summaries. If these files are not properly anonymized, the tool may retain sensitive information and reuse it in future responses.

3. Exposure of chat histories: If the conversation log is not deleted, anyone with access to the account (or the same session) can review previous exchanges containing strategic data, trade secrets, or internal communications.

4. Sharing links or access paths: Including links to internal repositories, shared folders, or protected APIs in the prompt can allow the AI to crawl those resources and expose their contents, potentially leading to large-scale data leaks.

5. Use of real data in test environments: Production data (customers, employees, suppliers) is often used to test AI integrations or plugins. If test environments are not properly isolated, that data can be leaked or indexed without any control.
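Some of these situations can be caught automatically by scanning a prompt before it is sent. The Python sketch below flags email addresses, phone numbers, and links to internal hosts. The rule names, the patterns, and the host suffixes (`.internal`, `.corp`, `.local`) are assumptions; adapt them to the naming used in your own network.

```python
import re

# Hypothetical rules; none of these patterns is exhaustive.
RULES: dict[str, re.Pattern] = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "phone": re.compile(r"\+?\d[\d\s-]{7,}\d"),
    "internal_url": re.compile(
        r"https?://[^\s/]*\.(?:internal|corp|local)\b[^\s]*", re.IGNORECASE
    ),
}


def scan_prompt(prompt: str) -> dict[str, list[str]]:
    """Return the risky fragments found in a prompt, grouped by rule name."""
    findings: dict[str, list[str]] = {}
    for name, pattern in RULES.items():
        matches = pattern.findall(prompt)
        if matches:
            findings[name] = matches
    return findings
```

An empty result means none of the rules matched, not that the prompt is safe; a check like this complements user training rather than replacing it.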

Quick guide to secure configuration in search engines and smart assistants

To shield your interactions with AI, make these basic adjustments on any AI platform you use:

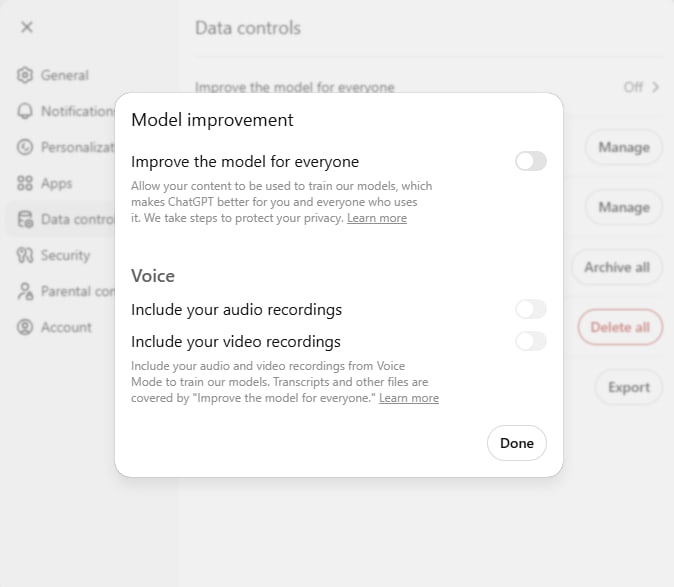

1. Go to Settings → Privacy or Data and disable “Use my data to train models” (or the equivalent option; the exact name varies by platform).

2. Set up automatic deletion of histories after a reasonable period (e.g., 30 days).

3. Review API integrations and block bulk uploads of non-essential information.

4. Enable two-factor authentication and audit third-party app permissions.

5. Centralize logs in a SIEM system and set up alerts for anomalous usage patterns.
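Where a platform does not offer automatic deletion, or where you keep your own copies of conversations, the retention rule from step 2 can be applied in your own tooling. This Python sketch separates conversations that are still within a 30-day window from those that should be deleted. The record layout (a dictionary with a `last_activity` timestamp) and the function name are assumptions for this example.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

RETENTION = timedelta(days=30)  # the example period from step 2


def purge_expired(
    conversations: list[dict],
    now: Optional[datetime] = None,
    retention: timedelta = RETENTION,
) -> tuple[list[dict], list[dict]]:
    """Split conversations into (kept, expired) by their last activity date.

    Each conversation is assumed to carry a timezone-aware
    'last_activity' datetime.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - retention
    kept = [c for c in conversations if c["last_activity"] >= cutoff]
    expired = [c for c in conversations if c["last_activity"] < cutoff]
    return kept, expired
```

The expired list can then be handed to whatever deletion routine your storage uses, and its size logged as evidence for audits.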

Conversational AI and smart search engines offer enormous advantages, but only if we use them wisely. With a simple plan of best practices and basic controls, you can enjoy their benefits without exposing your information. If you want more information, we're here to help.