Artificial intelligence is revolutionizing the way we approach complex problems and make decisions in a variety of areas, from healthcare to business process optimization. For professional developers, it is critical to stay on top of the latest innovations and technologies in AI that enable the creation of more effective and impactful solutions. In this article, we will explore recent enhancements introduced by Microsoft Azure, including innovations in Phi models, the simplified RAG approach to improve question answering, and custom generative AI models that offer greater adaptability to different applications. These tools and advancements enable developers to design and refine AI solutions that are more robust, efficient and specific to the needs of each project.

The Phi-3.5 Series and its Focus on Efficiency and Sustainability

Microsoft has introduced its latest innovation in artificial intelligence with the Phi-3.5 series, a family of small but powerful language models designed to improve performance across a wide range of tasks. This series includes three key models: Phi-3.5-mini-instruct, Phi-3.5-MoE-instruct and Phi-3.5-vision-instruct, which address different types of problems and applications, from code generation and logical or mathematical problem solving to text and image processing for applications such as optical character recognition and graphics analysis.

The Phi-3.5-mini-instruct model is optimized for fast reasoning tasks and has 3.8 billion parameters, allowing it to compete effectively with larger models. The Phi-3.5-MoE-instruct model is the largest model in the series with 42 billion parameters and uses the Mixture of Experts (MoE) architecture to efficiently handle complex tasks in multiple languages. Finally, the Phi-3.5-vision-instruct model is a multimodal model with 4.2 billion parameters, capable of processing both text and images.
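To give a feel for why a Mixture of Experts model can be large in total size yet efficient per request, here is a toy sketch of top-k expert routing, the general idea behind MoE. It is not the actual Phi-3.5-MoE-instruct implementation: the four "experts", the gate scores and the choice of `top_k=2` are illustrative assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, top_k=2):
    """Pick the top_k experts and renormalize their gate weights to sum to 1."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return {i: probs[i] / norm for i in chosen}


def moe_forward(x, experts, gate_scores, top_k=2):
    """Weighted sum of the outputs of the selected experts only."""
    weights = route_top_k(gate_scores, top_k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Hypothetical toy setup: 4 "experts", each of which just scales its input.
experts = [lambda x, k=k: x * (k + 1) for k in range(4)]
gate_scores = [0.1, 2.0, -1.0, 1.5]  # in a real model, produced by a learned router

weights = route_top_k(gate_scores, top_k=2)
output = moe_forward(3.0, experts, gate_scores, top_k=2)
print(sorted(weights))  # indices of the experts that were actually evaluated
```

Only the selected experts are evaluated for a given input, which is how an MoE model keeps the compute per token well below what its total parameter count would suggest.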

The Phi-3.5 models support a context window of up to 128,000 tokens, which lets them work with long documents and extended conversations without losing track of earlier context. They were trained on large volumes of high-quality data and are released under the open-source MIT license to encourage adoption and experimentation by developers.
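As a rough illustration of what a 128,000-token window means in practice, the sketch below estimates whether a set of documents fits in the window while leaving room for the answer. The 4-characters-per-token ratio and the reserved answer budget are assumptions for illustration only; exact counts require the model's own tokenizer.

```python
CONTEXT_WINDOW_TOKENS = 128_000  # figure quoted in the article
CHARS_PER_TOKEN = 4              # assumption: rough heuristic, not a tokenizer


def estimate_tokens(text):
    """Very rough token estimate (ceiling of characters / CHARS_PER_TOKEN)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents, reserved_for_answer=4_000):
    """Return (fits, used): whether the documents fit next to the reserved answer budget."""
    used = sum(estimate_tokens(d) for d in documents)
    return used + reserved_for_answer <= CONTEXT_WINDOW_TOKENS, used


# Two hypothetical documents of 100,000 and 300,000 characters.
docs = ["word " * 20_000, "word " * 60_000]
fits, used = fits_in_context(docs)
print(fits, used)
```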

[Figure: Quality of Phi-3.5 models versus size among small language models (SLMs)]

The defining feature of Microsoft's strategy with the Phi-3.5 series is its focus on efficiency and sustainability. Despite their smaller size, these models can compete with, and on specific tasks even outperform, larger models, challenging the notion that “bigger is better” in artificial intelligence. In practice, this efficiency means lower computational requirements, faster inference and a smaller environmental footprint. With the Phi-3.5 series, Microsoft signals a shift in AI development toward sustainability and affordability without sacrificing performance.

Optimizing AI applications using RAG and Azure

Retrieval-augmented generation (RAG) is an approach that improves the output of a large language model (LLM) by having it consult an authoritative knowledge base outside its training data before generating an answer. This makes the model's responses more relevant, accurate and useful in different contexts without the need to retrain the model.
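The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the knowledge base is a hypothetical in-memory list, word counts with cosine similarity stand in for real embeddings, and the final call to a language model is left out, so the sketch stops at the augmented prompt.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in practice this lives outside the model.
KNOWLEDGE_BASE = [
    "Phi-3.5-mini-instruct has 3.8 billion parameters.",
    "Phi-3.5-vision-instruct processes both text and images.",
    "Integrated vectorization is a feature of Azure AI Search.",
]


def bag_of_words(text):
    """Lowercased word counts, standing in for a real embedding."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, top_n=1):
    """Return the top_n knowledge-base entries most similar to the question."""
    q = bag_of_words(question)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda doc: cosine(q, bag_of_words(doc)), reverse=True)
    return ranked[:top_n]


def build_prompt(question, top_n=1):
    """Augment the question with retrieved context before it is sent to a model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, top_n))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


prompt = build_prompt("Which service offers integrated vectorization?")
print(prompt)
```

Because the knowledge lives in the retrieved context rather than in the model weights, updating the knowledge base changes the answers without any retraining.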

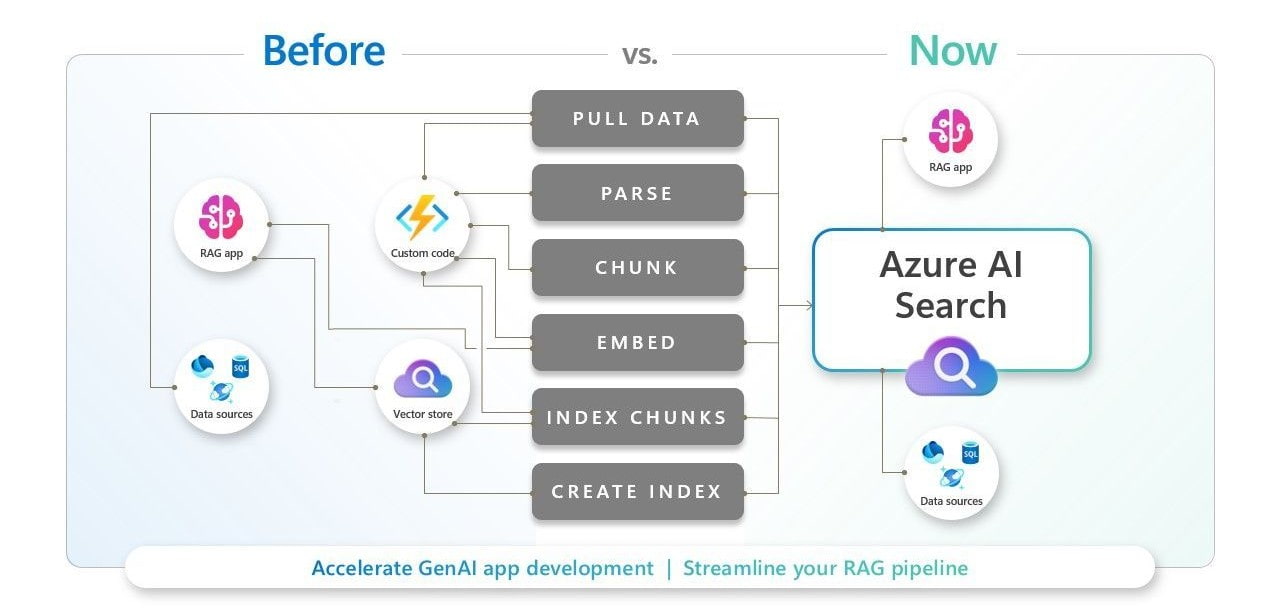

Microsoft is simplifying RAG processes for generative AI applications by integrating data preparation and the use of embeddings into a unified workflow. Vector search in RAG requires significant data preparation, which involves ingesting, parsing, enriching, embedding and indexing data from multiple sources.
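To make the preparation steps concrete, here is a toy version of the ingest, parse, enrich, embed and index pipeline. Everything in it is an assumption for illustration: the source names, the fixed-size word chunking, and above all the hashed bag-of-words `embed` function, which merely stands in for a real embedding model.

```python
import hashlib
import math

DIMENSIONS = 256  # assumption: toy vector size, unrelated to any real model


def chunk(text, max_words=40):
    """Parse step: split raw text into fixed-size word windows."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Embed step: hashed bag-of-words vector, L2-normalized."""
    vec = [0.0] * DIMENSIONS
    for word in text.lower().split():
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIMENSIONS
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def build_index(sources):
    """Run ingest -> parse -> enrich -> embed -> index over {source_name: raw_text}."""
    index = []
    for name, raw in sources.items():            # ingest each source
        for n, piece in enumerate(chunk(raw)):   # parse into chunks
            index.append({
                "id": f"{name}-{n}",
                "source": name,                  # enrich with metadata
                "text": piece,
                "vector": embed(piece),          # embed the chunk
            })
    return index                                 # the list acts as the index


def search(index, query, k=1):
    """Return the k entries whose vectors have the highest dot product with the query."""
    q = embed(query)

    def score(entry):
        return sum(a * b for a, b in zip(q, entry["vector"]))

    return sorted(index, key=score, reverse=True)[:k]


sources = {
    "faq.txt": "Integrated vectorization automates embedding during indexing",
    "notes.txt": "Small language models reduce inference cost and latency",
}
index = build_index(sources)
best = search(index, "embedding during indexing")[0]
print(best["id"])
```

Each of these hand-written steps is the kind of plumbing that a unified workflow is meant to take off the developer's hands.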

To automate and streamline these processes, Microsoft has announced the general availability of integrated vectorization in Azure AI Search. Integrated vectorization enables automatic vector indexing and querying using integrated embedding models, which helps applications realize the full potential of the data.
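As a hedged sketch of what this looks like in configuration, the snippet below builds an index definition with a vectorizer attached to a vector field, plus a query that sends plain text and lets the service vectorize it. The property names follow the Azure AI Search REST API as we understand it and should be verified against the current reference before use. The index, field, profile and vectorizer names, the endpoint and the deployment are all hypothetical placeholders, and the small checker function is our own helper, not part of any SDK.

```python
import json

# Hypothetical names throughout; property names should be checked against the REST reference.
index_definition = {
    "name": "docs-index",
    "fields": [
        {"name": "id", "type": "Edm.String", "key": True},
        {"name": "content", "type": "Edm.String", "searchable": True},
        {
            "name": "contentVector",
            "type": "Collection(Edm.Single)",
            "searchable": True,
            "dimensions": 1536,  # must match the embedding model's output size
            "vectorSearchProfile": "default-profile",
        },
    ],
    "vectorSearch": {
        "algorithms": [{"name": "hnsw-config", "kind": "hnsw"}],
        "vectorizers": [
            {
                "name": "openai-vectorizer",
                "kind": "azureOpenAI",
                "azureOpenAIParameters": {
                    "resourceUri": "https://<your-resource>.openai.azure.com",
                    "deploymentId": "<your-embedding-deployment>",
                    "modelName": "text-embedding-ada-002",
                },
            }
        ],
        "profiles": [
            {"name": "default-profile", "algorithm": "hnsw-config", "vectorizer": "openai-vectorizer"}
        ],
    },
}

# Query body: the text is sent as-is and vectorized by the service at query time.
text_query = {
    "select": "id,content",
    "vectorQueries": [
        {"kind": "text", "text": "how does integrated vectorization work?", "fields": "contentVector", "k": 3}
    ],
}


def dangling_references(definition):
    """Local sanity check: names referenced in the definition but never declared."""
    vs = definition["vectorSearch"]
    algorithms = {a["name"] for a in vs["algorithms"]}
    vectorizers = {v["name"] for v in vs["vectorizers"]}
    profiles = {p["name"] for p in vs["profiles"]}
    missing = []
    for field in definition["fields"]:
        profile = field.get("vectorSearchProfile")
        if profile is not None and profile not in profiles:
            missing.append(profile)
    for p in vs["profiles"]:
        if p["algorithm"] not in algorithms:
            missing.append(p["algorithm"])
        if "vectorizer" in p and p["vectorizer"] not in vectorizers:
            missing.append(p["vectorizer"])
    return missing


payload = json.dumps(index_definition, indent=2)
print(dangling_references(index_definition))
```

The key design point is the profile: it ties a vector field to both a search algorithm and a vectorizer, so the application never has to call the embedding model itself.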

Integrated Vectorization in Azure AI Search

The vectorization built into Azure AI Search improves developer productivity and enables organizations to deliver RAG systems as out-of-the-box solutions for new projects. This makes it easy to quickly build applications specific to each team's data sets and needs, without having to build a custom implementation every time.

RAG is also flexible: developers can apply it to a wide range of applications, from virtual assistants and chatbots to search and recommendation systems, and build solutions within the Azure and Microsoft ecosystem that are tailored to their own needs and application domains.

In addition, RAG can be easily integrated with other Azure and Microsoft technologies, such as natural language processing, data analytics and business intelligence.

Practical applications of RAG for developers working with Azure and Microsoft include:

1. Virtual assistants and chatbots: RAG bases responses on retrieved content, so virtual assistants and chatbots can give more accurate and relevant answers to user questions.

2. Search and recommendation systems: RAG can make search and recommendation systems more effective by returning more relevant answers and suggestions for user queries.

3. Document analysis and information extraction: RAG can identify and extract relevant data from multiple sources and documents, using the vectorization and indexing integrated into Azure AI Search.

Customized generative models: Creating specific AI solutions

Generative AI models are a powerful tool for building solutions tailored to a particular application. Azure has introduced custom generative models that allow developers to adapt AI models to their specific needs and application domains.

These custom generative models can be used in a wide range of applications, such as text, image, audio and video generation. Developers can customize the models to generate relevant, high-quality content tailored to their specific needs and requirements.

By using customized generative models, developers can improve the efficiency and impact of their AI solutions. These models enable the creation of more targeted and relevant applications, delivering an improved user experience and more accurate results.

Recent innovations in Azure, such as the Phi-3.5 models, simplified RAG and custom generative models, give professional developers powerful tools to create stronger, more impactful AI solutions. At Intelequia, we strive to stay current with the latest trends and technologies in artificial intelligence, so that our employees can take full advantage of these tools and continuously improve their skills.

The future of AI is promising, and at Intelequia we already offer solutions built on this technology, such as Intelewriter. We will continue to explore and share innovations in artificial intelligence to help developers create increasingly impactful and transformative solutions.